ORB-SLAM2 ROS implementation.

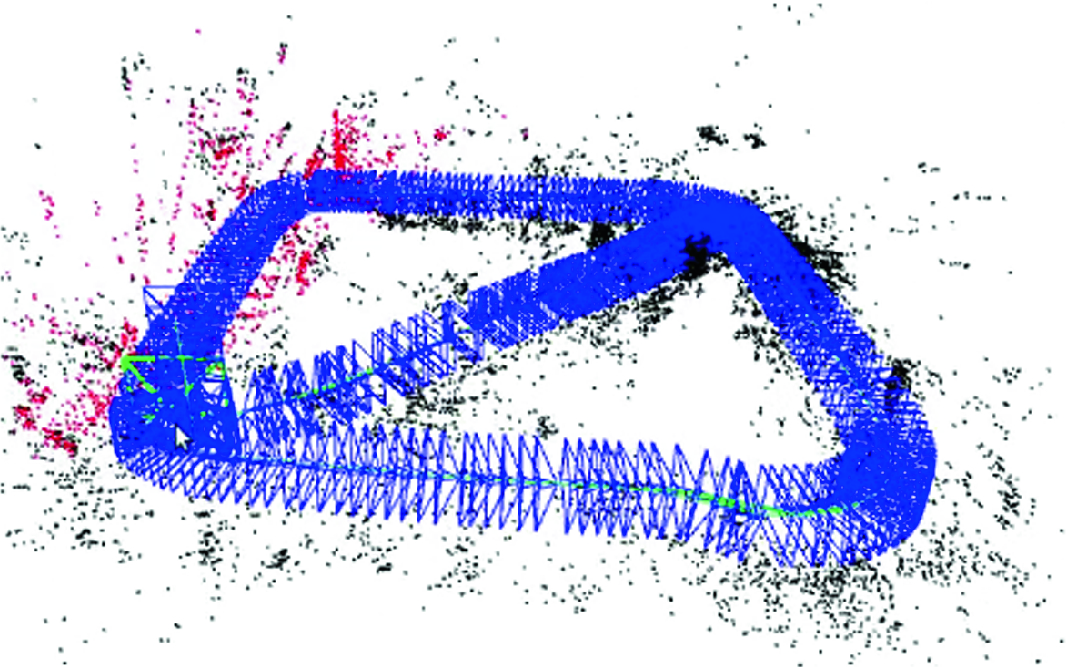

We present ORB-SLAM2, a complete SLAM system for monocular, stereo and RGB-D cameras, including map reuse, loop closing and relocalization capabilities. The system works in real time on standard CPUs in a wide variety of environments, from small hand-held indoor sequences to drones flying in industrial environments and cars driving around a city. Our back-end, based on bundle adjustment with monocular and stereo observations, allows for accurate trajectory estimation with metric scale. However, ORB-SLAM2 is based on the static-world assumption and still has shortcomings in handling dynamic scenes, so we integrate our method with the ORB-SLAM2 system to enhance its robustness and stability in highly dynamic environments.

- Maintainer status: maintained

- Maintainer: Lennart Haller

- Author:

- License: GPLv3

- Source: git https://github.com/appliedAI-Initiative/orb_slam_2_ros.git (branch: master)

Contents

- ORB-SLAM2 ROS node

- ROS parameters, topics and services

ORB-SLAM2 authors: Raul Mur-Artal, Juan D. Tardos, J. M. M. Montiel and Dorian Galvez-Lopez (DBoW2). The original implementation can be found here.

This is a ROS implementation of the ORB-SLAM2 real-time SLAM library for monocular, stereo and RGB-D cameras that computes the camera trajectory and a sparse 3D reconstruction (with true scale in the stereo and RGB-D cases). It can detect loops and relocalize the camera in real time. This implementation removes the Pangolin dependency and the original viewer. All data I/O is handled via ROS topics. For visualization you can use RViz.

Features

- Full ROS compatibility

- Supports many cameras out of the box, such as the Intel RealSense family. See the run section for a list.

- Data I/O via ROS topics

- Parameters can be set with the rqt_reconfigure GUI at runtime

- Very quick startup thanks to considerably faster vocabulary file loading

- Full Map save and load functionality

ROS parameters, topics and services

Parameters

There are currently three types of parameters: static and dynamic ROS parameters, and camera settings from the config file. The static parameters are sent to the ROS parameter server at startup and are not supposed to change. They are set in the launch files located in ros/launch. The parameters are:

- load_map: Bool. If set to true, the node will try to load the map provided with map_file at startup.

- map_file: String. The name of the file the map is saved to.

- settings_file: String. The location of the config file mentioned above.

- voc_file: String. The location of the vocabulary file.

- publish_pose: Bool. Whether a PoseStamped message should be published. Even if this is false, the tf is still published.

- publish_pointcloud: Bool. Whether the point cloud containing all key points (the map) should be published.

- pointcloud_frame_id: String. The frame ID of the point cloud/map.

- camera_frame_id: String. The frame ID of the camera position.
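To make the parameter types above concrete, here is a minimal C++ sketch that collects the static parameters into a struct and fills it from plain key/value strings. The struct, `parseBool` and `loadStaticParams` are hypothetical stand-ins for what a launch file puts on the parameter server; they are not the package's API, and the default values are placeholders rather than the package's real defaults.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical container mirroring the static parameters listed above.
// Defaults are placeholders, not the package's real defaults.
struct StaticParams {
  bool load_map = false;
  std::string map_file;
  std::string settings_file;
  std::string voc_file;
  bool publish_pose = false;
  bool publish_pointcloud = false;
  std::string pointcloud_frame_id;
  std::string camera_frame_id;
};

// Accepts only "true" or "false"; anything else is rejected.
bool parseBool(const std::string& value) {
  if (value == "true") return true;
  if (value == "false") return false;
  throw std::invalid_argument("expected true or false, got: " + value);
}

// Fills StaticParams from key/value strings (a stand-in for the values a launch
// file puts on the parameter server). Keys that are absent keep their defaults.
StaticParams loadStaticParams(const std::map<std::string, std::string>& kv) {
  StaticParams p;
  auto setString = [&kv](const std::string& key, std::string& field) {
    auto it = kv.find(key);
    if (it != kv.end()) field = it->second;
  };
  auto setBool = [&kv](const std::string& key, bool& field) {
    auto it = kv.find(key);
    if (it != kv.end()) field = parseBool(it->second);
  };
  setBool("load_map", p.load_map);
  setString("map_file", p.map_file);
  setString("settings_file", p.settings_file);
  setString("voc_file", p.voc_file);
  setBool("publish_pose", p.publish_pose);
  setBool("publish_pointcloud", p.publish_pointcloud);
  setString("pointcloud_frame_id", p.pointcloud_frame_id);
  setString("camera_frame_id", p.camera_frame_id);
  return p;
}
```

Rejecting anything other than "true"/"false" for the Bool parameters keeps a typo in a launch file from silently disabling map loading or publishing.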

Dynamic parameters can be changed at runtime, either directly via the command line or, as recommended, with rqt_reconfigure. The parameters are:

- localize_only: Bool. Toggles localization-only mode. In this mode, SLAM no longer adds new points to the map.

- reset_map: Bool. Set to true to erase the map and start a new one. After the reset, the parameter automatically reverts to false.

- min_num_kf_in_map: Int. Minimum number of keyframes a map must contain so that it is not reset when tracking is lost.
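The documented behaviour of the three dynamic parameters can be modelled in a few lines. This is a hypothetical C++ sketch, not the node's implementation: `MapState` counts keyframes as a stand-in for the whole map, and the value 5 for `min_num_kf_in_map` is an arbitrary placeholder.

```cpp
#include <cassert>

// Hypothetical parameter set; the values are placeholders, not the package's defaults.
struct DynamicParams {
  bool localize_only = false;
  bool reset_map = false;
  int min_num_kf_in_map = 5;
};

// Hypothetical map model that only counts keyframes.
class MapState {
 public:
  int keyframes() const { return num_keyframes_; }

  // Tracking offers a new keyframe; it is not added in localization-only mode.
  void offerKeyFrame(const DynamicParams& params) {
    if (!params.localize_only) ++num_keyframes_;
  }

  // Erases the map if a reset was requested, then flips reset_map back to false.
  void applyReset(DynamicParams& params) {
    if (params.reset_map) {
      num_keyframes_ = 0;
      params.reset_map = false;
    }
  }

  // When tracking is lost, a map with fewer than min_num_kf_in_map keyframes is reset.
  void onTrackingLost(const DynamicParams& params) {
    if (num_keyframes_ < params.min_num_kf_in_map) num_keyframes_ = 0;
  }

 private:
  int num_keyframes_ = 0;
};
```

In the real node these values arrive through dynamic_reconfigure (which rqt_reconfigure drives); here they are just struct fields so the logic can be exercised directly.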

Finally, the intrinsic camera calibration parameters along with some hyperparameters can be found in the specific yaml files in orb_slam2/config.

Published topics

The nodes publish the following topics:

- All nodes publish (given the settings) a PointCloud2 containing all key points of the map.

- A live image from the camera showing the currently detected key points and a status text.

- A tf from the pointcloud frame id to the camera frame id (the position).

Subscribed topics

- The mono node subscribes to /camera/image_raw for the input image.

- The RGBD node subscribes to /camera/rgb/image_raw for the RGB image and /camera/depth_registered/image_raw for the depth information.

- The stereo node subscribes to image_left/image_color_rect and image_right/image_color_rect for the corresponding images.

Services

All nodes can save the map via the service node_type/save_map, so the save_map services are:

- /orb_slam2_rgbd/save_map

- /orb_slam2_mono/save_map

- /orb_slam2_stereo/save_map
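The topic and service naming in the lists above can be captured in a small lookup. This hypothetical C++ helper only restates the names given in this section; the short node-type keys (`mono`, `rgbd`, `stereo`) are assumptions made for the sketch.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// The three node types covered in this section (hypothetical short labels).
bool isKnownNodeType(const std::string& node_type) {
  return node_type == "mono" || node_type == "rgbd" || node_type == "stereo";
}

// Input image topics for each node type, as listed under "Subscribed topics".
std::vector<std::string> inputTopics(const std::string& node_type) {
  if (node_type == "mono") return {"/camera/image_raw"};
  if (node_type == "rgbd")
    return {"/camera/rgb/image_raw", "/camera/depth_registered/image_raw"};
  if (node_type == "stereo")
    return {"image_left/image_color_rect", "image_right/image_color_rect"};
  throw std::invalid_argument("unknown node type: " + node_type);
}

// save_map service name following the node_type/save_map pattern,
// e.g. "mono" -> "/orb_slam2_mono/save_map".
std::string saveMapService(const std::string& node_type) {
  if (!isKnownNodeType(node_type))
    throw std::invalid_argument("unknown node type: " + node_type);
  return "/orb_slam2_" + node_type + "/save_map";
}
```

The stereo topics are kept exactly as listed, without a leading slash; in ROS such relative names are resolved against the node's namespace.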

ORB-SLAM2 Code (+ Theory) Analysis (1): Entering the mono_euroc Main Function

tags: ORB-SLAM analysis

To understand how a system is implemented, always start with its framework. The framework of ORB-SLAM2 is as follows.

In the Tracking thread, ORB features are extracted and an initial pose is estimated, so this thread belongs to the front-end visual odometry (VO) of SLAM. The Local Mapping thread, as its name suggests, works locally; it belongs to the back end and mainly performs back-end optimization and map building. The Loop Closing thread, as its name suggests, deals with loops and is divided into loop detection and loop correction. Note the data flow between them: the Tracking thread starts working as soon as it receives each new image frame; the keyframes received by the Local Mapping thread have already been processed by the Tracking thread; and the Loop Closing thread in turn works on keyframes that the Local Mapping thread has further processed and refined.

Note: from the Local Mapping thread onward, processing operates on keyframes!
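The hand-off between the three threads can be illustrated with a small producer/consumer pipeline. This is a simplified C++17 sketch under stated assumptions, not ORB-SLAM2's code: frames are plain integers, every `keyframe_every`-th frame is promoted to a keyframe (the real keyframe decision is more involved), the blocking queue is a simplified hand-off mechanism, and the placeholder comments mark where feature extraction, local bundle adjustment and loop detection would run.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Minimal thread-safe FIFO used to hand work from one thread to the next.
template <typename T>
class BlockingQueue {
 public:
  void push(T item) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      queue_.push(std::move(item));
    }
    cv_.notify_one();
  }

  // Signals that no more items will be pushed.
  void close() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      closed_ = true;
    }
    cv_.notify_all();
  }

  // Blocks until an item is available; returns std::nullopt once closed and empty.
  std::optional<T> pop() {
    std::unique_lock<std::mutex> lock(mutex_);
    cv_.wait(lock, [this] { return closed_ || !queue_.empty(); });
    if (queue_.empty()) return std::nullopt;
    T item = std::move(queue_.front());
    queue_.pop();
    return item;
  }

 private:
  std::mutex mutex_;
  std::condition_variable cv_;
  std::queue<T> queue_;
  bool closed_ = false;
};

struct KeyFrame {
  int frame_id;
  bool refined = false;  // set once Local Mapping has processed it
};

// Runs Tracking -> Local Mapping -> Loop Closing on num_frames dummy frames and
// returns the ids of the keyframes that reached Loop Closing.
// keyframe_every must be positive (hypothetical keyframe rule).
std::vector<int> runPipeline(int num_frames, int keyframe_every) {
  BlockingQueue<KeyFrame> to_local_mapping;
  BlockingQueue<KeyFrame> to_loop_closing;
  std::vector<int> reached_loop_closing;  // written only by the Loop Closing thread

  std::thread tracking([&] {
    for (int i = 0; i < num_frames; ++i) {
      // Placeholder: ORB extraction and initial pose estimation for frame i.
      if (i % keyframe_every == 0) to_local_mapping.push(KeyFrame{i});
    }
    to_local_mapping.close();
  });

  std::thread local_mapping([&] {
    while (auto kf = to_local_mapping.pop()) {
      kf->refined = true;  // Placeholder: local BA and map building.
      to_loop_closing.push(*kf);
    }
    to_loop_closing.close();
  });

  std::thread loop_closing([&] {
    while (auto kf = to_loop_closing.pop()) {
      // Placeholder: loop detection, then loop correction if a loop is found.
      if (kf->refined) reached_loop_closing.push_back(kf->frame_id);
    }
  });

  tracking.join();
  local_mapping.join();
  loop_closing.join();
  return reached_loop_closing;
}
```

Only keyframes ever leave the Tracking thread, which mirrors the note above: Local Mapping and Loop Closing never see ordinary frames.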

Remaining question: what are Place Recognition and the Map? They are explained in the next post.

This post walks through the main function of mono_euroc.cc.

cd orb_slam/src/ORB_SLAM2   # my copy of ORB_SLAM2 is in orb_slam/src/ORB_SLAM2

To run the program, enter:

./Examples/Monocular/mono_euroc Vocabulary/ORBvoc.txt Examples/Monocular/EuRoC.yaml /home/hltt3838/EuRoC_data/MH_01_easy/mav0/cam0/data Examples/Monocular/EuRoC_TimeStamps/MH01.txt

Here argv[0] is the executable, argv[1] the ORB vocabulary, argv[2] the settings file, argv[3] the image folder and argv[4] the timestamp file.
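The command above passes exactly four arguments after the program name. A minimal C++ sketch of that argument check, assuming the argument order given above; `MonoEurocArgs` and `parseArgs` are hypothetical names, and the usage message is illustrative rather than the exact text printed by the example program.

```cpp
#include <cassert>
#include <iostream>
#include <optional>
#include <string>

// Hypothetical holder for the four inputs, in the order argv[1]..argv[4].
struct MonoEurocArgs {
  std::string vocabulary;    // argv[1], e.g. Vocabulary/ORBvoc.txt
  std::string settings;      // argv[2], e.g. Examples/Monocular/EuRoC.yaml
  std::string image_folder;  // argv[3], e.g. .../mav0/cam0/data
  std::string times_file;    // argv[4], e.g. .../EuRoC_TimeStamps/MH01.txt
};

// Returns the parsed arguments, or std::nullopt (after printing a usage line)
// when the argument count is wrong. argv[0] is the program name.
std::optional<MonoEurocArgs> parseArgs(int argc, const char* const argv[]) {
  if (argc != 5) {
    std::cerr << "Usage: " << (argc > 0 ? argv[0] : "mono_euroc")
              << " path_to_vocabulary path_to_settings path_to_image_folder"
                 " path_to_times_file\n";
    return std::nullopt;
  }
  return MonoEurocArgs{argv[1], argv[2], argv[3], argv[4]};
}
```

A `main` would call `parseArgs(argc, argv)` first and return a non-zero exit code when it yields `std::nullopt`.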

Analysis: the program is easy to understand. It mainly reads the image information, initializes the system and tracks the images.
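While tracking, the EuRoC example loop also paces itself so that the sequence is replayed at roughly its recorded frame rate. The sketch below isolates that timing step as a stand-alone C++ function in the style of the ORB-SLAM2 examples; the name `waitBeforeNextFrame` is hypothetical, and it assumes timestamps already converted to seconds and `ttrack` measured around the tracking call.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// After tracking frame ni, returns how long (in seconds) to wait before loading
// the next frame: the frame interval T minus the time spent tracking, or 0 if
// tracking already took longer than T.
double waitBeforeNextFrame(const std::vector<double>& timestamps,
                           std::size_t ni, double ttrack) {
  const double tframe = timestamps[ni];
  double T = 0.0;
  if (ni + 1 < timestamps.size())
    T = timestamps[ni + 1] - tframe;  // gap to the next frame
  else if (ni > 0)
    T = tframe - timestamps[ni - 1];  // last frame: reuse the previous gap
  return ttrack < T ? T - ttrack : 0.0;
}
```

In a loop, the returned value could be passed to `std::this_thread::sleep_for(std::chrono::duration<double>(wait))` before the next image is read.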

Function 1: void LoadImages()

Understanding:

The program is well written, but pay attention to how it reads the images: when you use your own image data, adapt the reading code so that the timestamp list corresponds to your images.

The following line converts the timestamps to seconds:

vTimeStamps.push_back(t/1e9); // MH01.txt stores nanoseconds; divide by 1e9 to get seconds
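A minimal sketch of a LoadImages-style reader, assuming (as in the EuRoC data) one nanosecond timestamp per line and image files named `<timestamp>.png` inside the image folder. It reads from any `std::istream` so it can be tried without the dataset; the name `LoadImagesFromStream` and its signature are hypothetical and differ from the function in mono_euroc.cc.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Reads one timestamp per line, builds the matching image path, and stores the
// timestamp converted from nanoseconds to seconds.
void LoadImagesFromStream(const std::string& image_folder, std::istream& times,
                          std::vector<std::string>& vstrImages,
                          std::vector<double>& vTimeStamps) {
  std::string line;
  while (std::getline(times, line)) {
    std::istringstream ss(line);
    std::string stamp;
    if (!(ss >> stamp)) continue;  // skip blank or whitespace-only lines
    // The image file is named after the raw nanosecond timestamp.
    vstrImages.push_back(image_folder + "/" + stamp + ".png");
    const double t = std::stod(stamp);
    vTimeStamps.push_back(t / 1e9);  // nanoseconds -> seconds
  }
}
```

Keeping the timestamp as a string for the file name avoids rounding: a nanosecond timestamp has more digits than a double can represent exactly, so only the seconds value goes through floating point.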

Function 2: ORB_SLAM2::System SLAM(...)

This constructor is implemented in System.cc; for a detailed analysis, see: https://mp.csdn.net/editor/html/115089937
